AI is here to stay

The term “artificial intelligence” (AI) was coined nearly 70 years ago, in a proposal for a workshop at Dartmouth College. John McCarthy, one of the proposal's authors, later defined AI as “the science and engineering of making intelligent machines”. Since then, the field has had its ups and downs, but it re-emerged into the limelight a decade ago with revolutionary advances in deep learning. It has since advanced further with the deployment of generative adversarial networks (GANs), variational autoencoders (VAEs) and transformers.

These techniques are now sophisticated enough to meet Dartmouth’s stated ambition of creating intelligent machines. OpenAI is a good example. Its software suite (ChatGPT, DALL-E and Codex) is built on transformer-based generative models, including large language models. It is already paving the way for new drug discovery, taking over coding tasks from software engineers, conducting sophisticated conjoint-based market research, and dramatically improving customer service. Recent studies also demonstrate significant productivity gains from generative AI.

The essentials

With all the hype surrounding AI, some may find its many benefits suspect. More precisely, Gartner’s Hype Cycle might suggest that we are approaching the “Peak of Inflated Expectations”. Whatever the case, the benefits of AI need to be balanced against new social, ethical and trust challenges.

As with any technology, AI will only be widely adopted if it benefits society rather than being used in dangerous or abusive ways. The field goes by many names: beneficial AI, responsible AI, ethical AI, trustworthy AI or explainable AI. Each of these terms reminds us that AI needs a foundation of trust to thrive.

Today, many governments and international public organizations have developed their own frameworks for AI, and private companies are also taking the lead. Google’s AI principles, for example, include “Be socially beneficial”, “Avoid creating or reinforcing unfair bias”, “Be built and tested for safety” and “Be accountable to people”. SAP has set up an AI Ethics Steering Committee and an AI Ethics Advisory Group. But the key question now is how companies translate AI principles into actual organizational practices. After all, many big-name companies have faced serious problems with their use of AI. Examples include Microsoft’s Tay chatbot, which began spreading hate speech, and Amazon’s experimental recruiting tool, which was found to favor male candidates.

The AI journey

Putting these principles into practice may be complex, but companies that succeed in this operational translation will serve both society and their own business objectives.

I recently worked with a leading consultancy to assess how major global companies rate their AI journey – in particular, how they have implemented trustworthy organizational practices.

Our sample comprised over 1,500 companies spread across 10 major economies, including the USA, China, India, the EU member states, Brazil and South Africa.

Here are the five main takeaways:

- Companies are not standing still: around 80% of them have launched some form of trustworthy AI initiative.

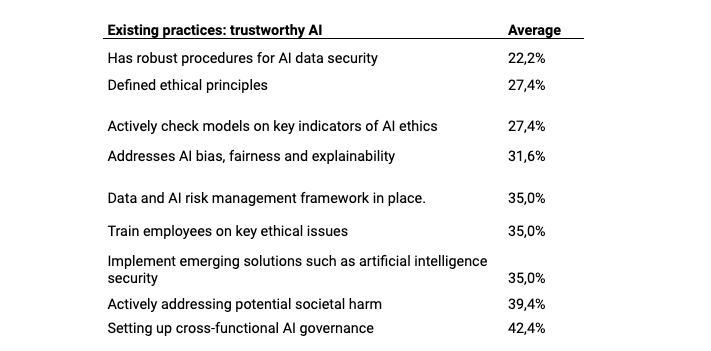

- However, companies rarely adopt the full range of practices that form the backbone of trustworthy AI. On average, they have adopted 4 of the 10 organizational practices reviewed, and only 2% have adopted all of them.

- None of these practices has yet become widespread (Table 1).

- Country and sector appear to influence the degree of operationalization. Europe lags behind Asia (India and Japan), Canada is ahead of the USA, and the high-tech sector is no further ahead than other sectors.

- Operationalizing trustworthy AI is a top priority for around 30% of companies. Their aim, however, is often less to expand their efforts than to catch up with their peers.

Hacking the leaders

Nevertheless, comparing the top 10% of companies with the 30% that have yet to implement trustworthy AI gives some grounds for hope. There is no visible correlation (yet) between responsible AI practices and return on investment (ROI) in AI. This holds whether ROI is measured by revenue, by investment made or by the profitability of AI projects. However, implementation does rise with AI use. The more AI use cases large companies exploit, and the more they have spent on AI, the more extensively they implement trustworthy AI. These companies also give it higher priority relative to other actions.

Table 1: Trustworthy AI practices among 1,500 of the world’s largest listed companies

The 2023 edition of the Network Readiness Index, dedicated to the theme of trust in technology and the network society, will launch on November 20th with a hybrid event at Saïd Business School, University of Oxford.

For more information about the Network Readiness Index, visit https://networkreadinessindex.org/

Jacques Bughin is the CEO of Machaon Advisory and a professor of management. He retired from McKinsey as senior partner and director of the McKinsey Global Institute. He advises Antler and Fortino Capital, two major VC/PE firms, and serves on the board of multiple companies. He has served as a member of the Portulans Institute Advisory Board since 2019.